Requirements

Microservices in the DADI platform are built on Node.js, a JavaScript runtime built on Google Chrome's V8 JavaScript engine. Node.js uses an event-driven, non-blocking I/O model that makes it lightweight and efficient.

DADI follows the Node.js LTS (Long Term Support) release schedule, and as such the version of Node.js required to run DADI products is coupled to the version of Node.js currently in Active LTS. See the LTS schedule for further information.

Creating an API

The easiest way to install API is using DADI CLI. CLI is a command line application that can be used to create and maintain installations of DADI products. Follow the simple instructions below, or see more detailed documentation for DADI CLI.

Create new API installation

There are two ways to create a new API with the CLI: either manually create a new directory for API or let CLI handle that for you. DADI CLI accepts an argument for project-name which it uses to create a directory for the installation.

Manual directory creation

$ mkdir my-api

$ cd my-api

$ npx dadi-cli api new

Automatic directory creation

$ npx dadi-cli api new my-api

$ cd my-api

DADI CLI will install the latest version of API and copy a set of files to your chosen directory so you can launch API almost immediately.

Installing DADI API directly from NPM

All DADI platform microservices are also available from NPM. To add API to an existing project as a dependency:

$ cd my-existing-node-app

$ npm install --save @dadi/api

Application anatomy

When CLI finishes creating your API, the application directory will contain the basic requirements for launching your API. The following directories and files have been created for you:

my-api/
  config/              # contains environment-specific configuration files
    config.development.json
  server.js            # the entry point for the application
  package.json
  workspace/
    collections/       # collection specification files
    endpoints/         # custom JavaScript endpoints

Configuration

API reads a set of configuration parameters that define its behaviour and adapt it to each environment it runs in. These parameters are defined in JSON files placed inside the config/ directory and named config.{ENVIRONMENT}.json, where {ENVIRONMENT} is the value of the NODE_ENV environment variable. In practice, this lets you keep separate configuration for development, production, staging, QA or any other environment your development workflow requires.

Some configuration parameters also have corresponding environment variables, which will override whatever value is set in the configuration file.
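As a rough illustration of the lookup described above, the following sketch derives the configuration file name from NODE_ENV and lets an environment variable take precedence over a value from the file. It is not API's actual loader: the function names configPathFor and resolvePort are hypothetical, and only the PORT variable (documented for server.port) is handled.

```javascript
// Hypothetical helpers, for illustration only; API's own loader may differ.

// Build the config file path for a given environment name.
function configPathFor(nodeEnv) {
  const env = nodeEnv || 'development'; // the documented default for `env`
  return `config/config.${env}.json`;
}

// Resolve server.port: the PORT environment variable, when set,
// overrides the value read from the configuration file.
function resolvePort(fileConfig, envVars) {
  if (envVars.PORT !== undefined && envVars.PORT !== '') {
    return Number(envVars.PORT);
  }
  return fileConfig.server.port;
}

const fileConfig = { server: { port: 8081 } };

console.log(configPathFor('production'));               // config/config.production.json
console.log(resolvePort(fileConfig, { PORT: '3000' })); // 3000
console.log(resolvePort(fileConfig, {}));               // 8081
```

In a real application the second argument would typically be process.env.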

The following table shows a list of all the available configuration parameters.

| Path | Description | Environment variable | Default | Format |
|---|---|---|---|---|
| app.name | The application name | N/A | DADI API Repo Default | String |
| publicUrl.host | The host of the URL where the API instance can be publicly accessed | URL_HOST | | * |
| publicUrl.port | The port of the URL where the API instance can be publicly accessed | URL_PORT | | * |
| publicUrl.protocol | The protocol of the URL where the API instance can be publicly accessed | URL_PROTOCOL | http | String |
| server.host | Accept connections on the specified address. If the host is omitted, the server will accept connections on any IPv6 address (::) when IPv6 is available, or any IPv4 address (0.0.0.0) otherwise. | HOST | | * |
| server.port | Accept connections on the specified port. A value of zero will assign a random port. | PORT | 8081 | Number |
| server.redirectPort | Port from which HTTP connections are redirected to HTTPS | REDIRECT_PORT | | port |
| server.protocol | The protocol the web application will use | PROTOCOL | http | String |
| server.sslPassphrase | The passphrase of the SSL private key | SSL_PRIVATE_KEY_PASSPHRASE | | String |
| server.sslPrivateKeyPath | The filename of the SSL private key | SSL_PRIVATE_KEY_PATH | | String |
| server.sslCertificatePath | The filename of the SSL certificate | SSL_CERTIFICATE_PATH | | String |
| server.sslIntermediateCertificatePath | The filename of an SSL intermediate certificate, if any | SSL_INTERMEDIATE_CERTIFICATE_PATH | | String |
| server.sslIntermediateCertificatePaths | The filenames of SSL intermediate certificates; overrides sslIntermediateCertificatePath (singular) | SSL_INTERMEDIATE_CERTIFICATE_PATHS | | Array |
| datastore | The name of the NPM module to use as a data connector for collection data | N/A | @dadi/api-mongodb | String |
| auth.tokenUrl | The endpoint for bearer token generation | N/A | /token | String |
| auth.tokenTtl | Number of seconds that bearer tokens are valid for | N/A | 1800 | Number |
| auth.clientCollection | Name of the collection where clientId/secret pairs are stored | N/A | clientStore | String |
| auth.tokenCollection | Name of the collection where bearer tokens are stored | N/A | tokenStore | String |
| auth.datastore | The name of the NPM module to use as a data connector for authentication data | N/A | @dadi/api-mongodb | String |
| auth.database | The name of the database to use for authentication | DB_AUTH_NAME | test | String |
| auth.cleanupInterval | The interval (in seconds) at which the token store will delete expired tokens from the database | N/A | 3600 | Number |
| auth.saltRounds | The difficulty factor used when hashing client secrets (lower values mean hashes are faster to generate but easier to crack) | N/A | 10 | Number |
| caching.ttl | Number of seconds that cached items are valid for | N/A | 300 | Number |
| caching.directory.enabled | If enabled, cache files will be saved to the filesystem | N/A | true | Boolean |
| caching.directory.path | The relative path to the cache directory | N/A | ./cache/api | String |
| caching.directory.extension | The extension to use for cache files | N/A | json | String |
| caching.directory.autoFlush | If true, cached files that are older than the specified TTL setting will be automatically deleted | N/A | true | Boolean |
| caching.directory.autoFlushInterval | Interval to run the automatic flush mechanism, if enabled in autoFlush | N/A | 60 | Number |
| caching.redis.enabled | If enabled, cache files will be saved to the specified Redis server | REDIS_ENABLED | | Boolean |
| caching.redis.host | The Redis server host | REDIS_HOST | 127.0.0.1 | String |
| caching.redis.port | The port for the Redis server | REDIS_PORT | 6379 | port |
| caching.redis.password | The password for the Redis server | REDIS_PASSWORD | | String |
| logging.enabled | If true, logging is enabled using the following settings. | N/A | true | Boolean |
| logging.level | Sets the logging level. | N/A | info | debug or info or warn or error or trace |
| logging.path | The absolute or relative path to the directory for log files. | N/A | ./log | String |
| logging.filename | The name to use for the log file, without extension. | N/A | api | String |
| logging.extension | The extension to use for the log file. | N/A | log | String |
| logging.accessLog.enabled | If true, HTTP access logging is enabled. The log file name is similar to the setting used for normal logging, with the addition of "access". For example api.access.log. | N/A | true | Boolean |
| logging.accessLog.kinesisStream | An AWS Kinesis stream to write log records to. | KINESIS_STREAM | | String |
| paths.collections | The relative or absolute path to collection specification files | N/A | workspace/collections | String |
| paths.endpoints | The relative or absolute path to custom endpoint files | N/A | workspace/endpoints | String |
| paths.hooks | The relative or absolute path to hook specification files | N/A | workspace/hooks | String |
| feedback | If true, responses to DELETE requests will include a count of deleted and remaining documents, as opposed to an empty response body | N/A | | Boolean |
| status.enabled | If true, status endpoint is enabled. | N/A | | Boolean |
| status.routes | An array of routes to test. Each route object must contain properties route and expectedResponseTime. | N/A | | Array |
| query.useVersionFilter | If true, the API version parameter is extracted from the request URL and passed to the database query | N/A | | Boolean |
| media.defaultBucket | The name of the default media bucket | N/A | mediaStore | String |
| media.buckets | The names of media buckets to be used | N/A | | Array |
| media.tokenSecret | The secret key used to sign and verify tokens when uploading media | N/A | catboat-beatific-drizzle | String |
| media.tokenExpiresIn | The duration a signed token is valid for. Expressed in seconds or a string describing a time span (https://github.com/zeit/ms). E.g. 60, "2 days", "10h", "7d" | N/A | 1h | * |
| media.storage | Determines the storage type for uploads | N/A | disk | disk or s3 |
| media.basePath | Sets the root directory for uploads | N/A | workspace/media | String |
| media.pathFormat | Determines the format for the generation of subdirectories to store uploads | N/A | date | none or date or datetime or sha1/4 or sha1/5 or sha1/8 |
| media.s3.accessKey | The S3 access key used to connect to S3 | AWS_S3_ACCESS_KEY | | String |
| media.s3.secretKey | The S3 secret key used to connect to S3 | AWS_S3_SECRET_KEY | | String |
| media.s3.bucketName | The name of the AWS S3 or Digital Ocean Spaces bucket in which to store uploads | AWS_S3_BUCKET_NAME | | String |
| media.s3.region | The S3 region | AWS_S3_REGION | | String |
| media.s3.endpoint | The S3 endpoint, required for accessing a Digital Ocean Space | | | String |
| env | The application environment. | NODE_ENV | development | String |
| cluster | If true, API runs in cluster mode, starting a worker for each CPU core | N/A | | Boolean |
| cors | If true, responses will include headers for cross-domain resource sharing | N/A | | Boolean |
| internalFieldsPrefix | The character to be used for prefixing internal fields | N/A | _ | String |
| databaseConnection.maxRetries | The maximum number of reconnection attempts after a database connection fails | N/A | 10 | Number |
| i18n.defaultLanguage | ISO-639-1 code of the default language | N/A | en | String |
| i18n.languages | List of ISO-639-1 codes for the supported languages | N/A | [] | Array |
| i18n.fieldCharacter | Special character to denote a translated field | N/A | : | String |
| search.enabled | If true, API responds to collection /search endpoints and will index content | N/A | false | Boolean |
| search.minQueryLength | Minimum search string length | N/A | 3 | Number |
| search.wordCollection | The name of the datastore collection that will hold tokenized words | N/A | words | String |
| search.datastore | The datastore module to use for storing and querying indexed documents | N/A | @dadi/api-mongodb | String |
| search.database | The name of the database to use for storing and querying indexed documents | DB_SEARCH_NAME | search | String |

Authentication

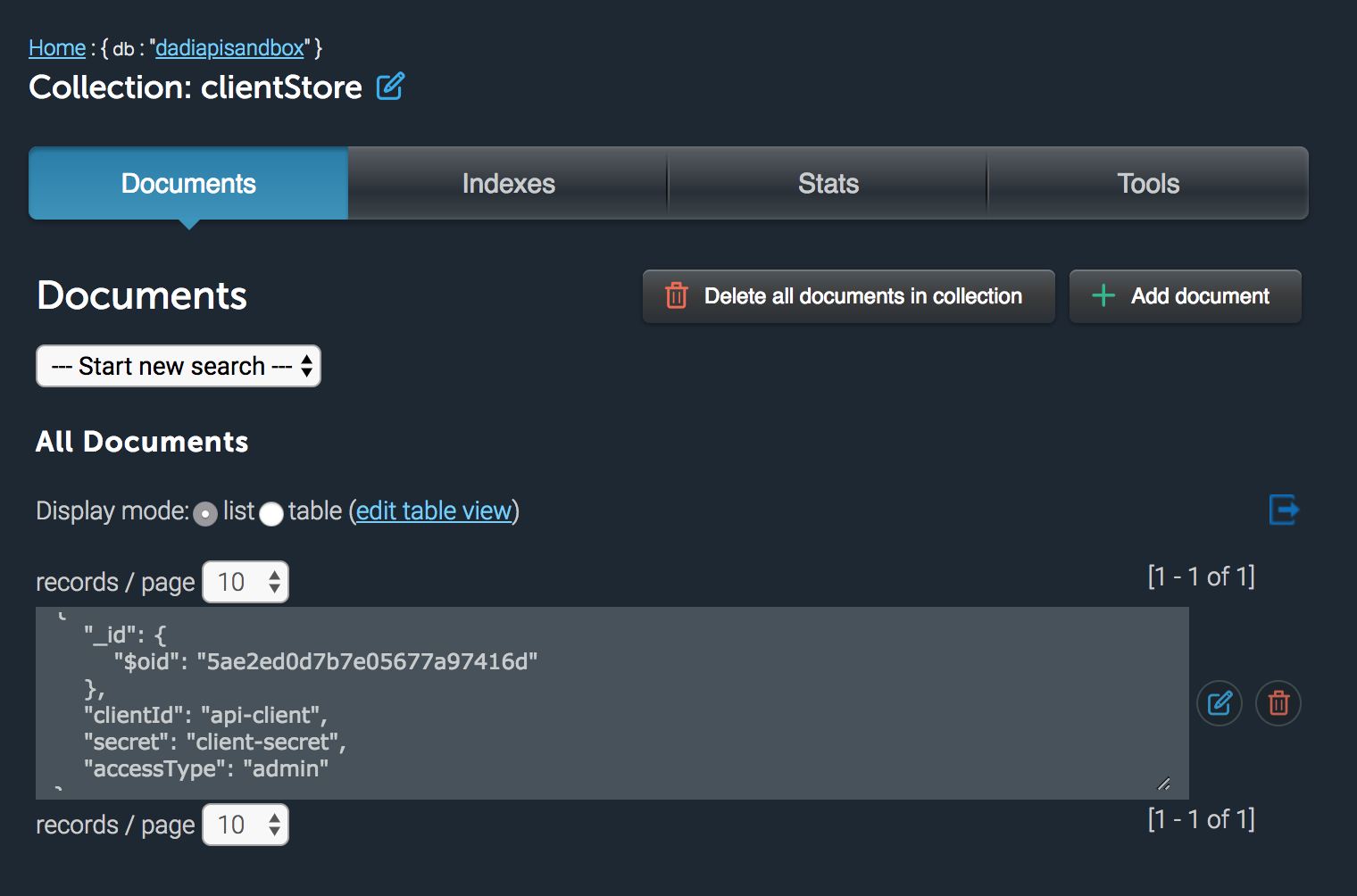

DADI API provides a full-featured authentication layer based on the Client Credentials flow of OAuth 2.0. Consumers must exchange a set of client credentials for a temporary access token, which must be included in API requests.

A client is represented as a set of credentials (ID + secret) and an access type, which can be set to admin or user. If set to admin, the client can perform any operation in API without any restrictions. If not, they will be subject to the rules set in the access control list.

Adding clients

If you've installed DADI CLI you can use that to create a new client in the database. See instructions for Adding clients with CLI.

Alternatively, use the built-in NPM script to start the Client Record Generator, which will ask you a series of questions about the new client and insert a record into the configured database.

$ npm explore @dadi/api -- npm run create-client

Creating the client in the correct database

To ensure the correct database is used for your environment, add an environment variable to the command:

$ NODE_ENV=production npm explore @dadi/api -- npm run create-client

Obtaining an access token

Obtain an access token by sending a POST request to your API's token endpoint, passing your client credentials in the body of the request. The token endpoint is configurable using the property auth.tokenUrl, with a default value of /token.

POST /token HTTP/1.1

Content-Type: application/json

Host: api.somedomain.tech

Connection: close

Content-Length: 65

{

"clientId": "my-client-key",

"secret": "my-client-secret"

}

With a request like the above, you should expect a response containing an access token, as below:

HTTP/1.1 200 OK

Content-Type: application/json

Cache-Control: no-store

Content-Length: 95

{

"accessToken": "4172bbf1-0890-41c7-b0db-477095a288b6",

"tokenType": "Bearer",

"expiresIn": 3600,

"accessType": "admin"

}
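The exchange above could be issued from a Node.js consumer along the following lines. This is a minimal sketch, not part of DADI API: buildTokenRequest and requestToken are hypothetical names, the token path is assumed to be the default /token, and a global fetch (Node.js 18 or later) is assumed unless another implementation is passed in.

```javascript
// Sketch only: these helpers are hypothetical and not provided by DADI API.

// Build the URL and fetch options for a token request.
function buildTokenRequest(baseUrl, clientId, secret, tokenPath = '/token') {
  return {
    url: new URL(tokenPath, baseUrl).toString(),
    options: {
      method: 'POST',
      headers: { 'Content-Type': 'application/json' },
      body: JSON.stringify({ clientId, secret })
    }
  };
}

// `fetchImpl` is injectable so the function can be exercised without a network.
async function requestToken(baseUrl, clientId, secret, fetchImpl = fetch) {
  const { url, options } = buildTokenRequest(baseUrl, clientId, secret);
  const response = await fetchImpl(url, options);

  if (!response.ok) {
    throw new Error(`Token request failed with status ${response.status}`);
  }

  // Expected shape: { accessToken, tokenType, expiresIn, accessType }
  return response.json();
}
```

A caller would then use something like requestToken('https://api.somedomain.tech', 'my-client-key', 'my-client-secret') and read accessToken from the resolved object.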

Using an access token

Once you have an access token, each request to the API should include an Authorization header containing the token:

GET /1.0/library/books HTTP/1.1

Content-Type: application/json

Authorization: Bearer 4172bbf1-0890-41c7-b0db-477095a288b6

Host: api.somedomain.tech

Connection: close

Access token expiry

The response returned when requesting an access token contains a property expiresIn which is set to the number of seconds the access token is valid for. When this period has elapsed, API automatically invalidates the access token and a subsequent request to API using that access token will return an invalid token error.

The consumer application must request a new access token to continue communicating with the API.
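One way a consumer might handle expiry is to cache the token and request a new one once expiresIn seconds have passed. The sketch below assumes a caller-supplied fetchToken function that resolves to an object shaped like the token response; createTokenProvider is a hypothetical helper, not something API ships.

```javascript
// Sketch only: `createTokenProvider` is a hypothetical helper.
// `fetchToken` must resolve to { accessToken, expiresIn } (seconds);
// `now` returns the current time in milliseconds and is injectable for testing.
function createTokenProvider(fetchToken, now = Date.now) {
  let cached = null; // { accessToken, expiresAt }

  return async function getToken() {
    // Reuse the cached token while it is still within its validity period.
    if (cached && now() < cached.expiresAt) {
      return cached.accessToken;
    }

    // Otherwise request a new token and remember when it expires.
    const { accessToken, expiresIn } = await fetchToken();
    cached = { accessToken, expiresAt: now() + expiresIn * 1000 };
    return accessToken;
  };
}
```

A production client would probably refresh slightly before the deadline to allow for clock drift and request latency, and would still need to handle a 401 response in case the token is invalidated early.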

Internal collections

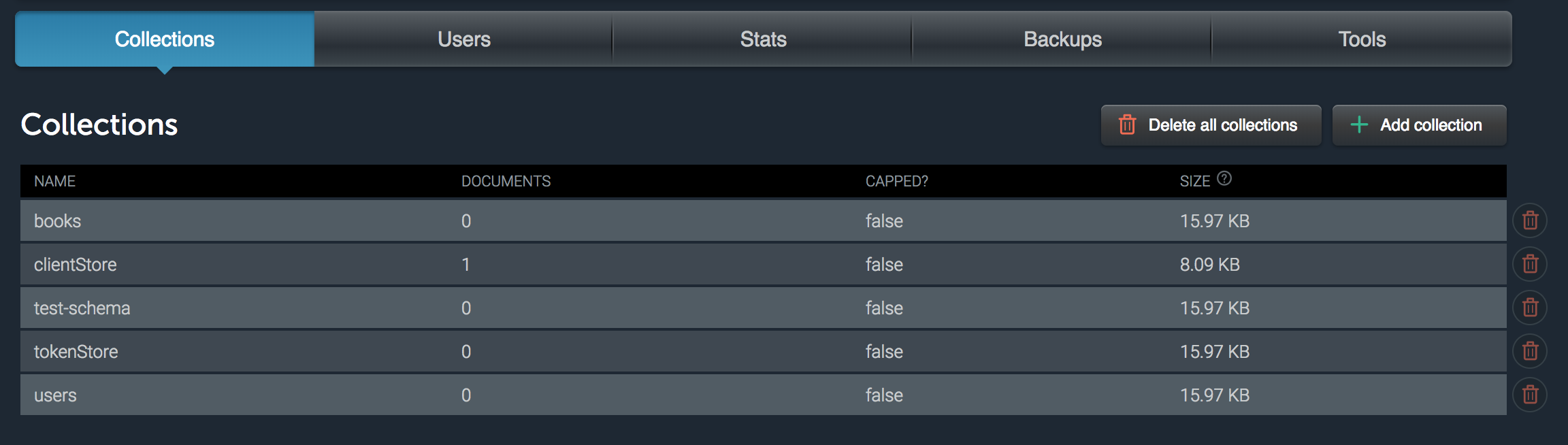

Internally, API uses three collections to store authentication data:

- clientStore: stores API clients
- roleStore: stores API roles
- accessStore: stores aggregate data computed from clients, roles and permissions

The names for these collections can be configured using the auth.clientCollection, auth.roleCollection and auth.accessCollection configuration properties, respectively. But unless they happen to clash with the name of one of your collections, you don't need to worry about setting them.

Collection authentication

By default, collections require all requests to be authenticated and authorised. This behaviour can be changed on a per-collection basis by changing the authenticate property in the collection settings block, which can be set to:

| Value | Description | Example |
|---|---|---|
| true (default) | Authentication is required for all HTTP verbs | true |
| false | Authentication is not required for any HTTP verb, making the collection fully accessible to anyone | false |
| Array | Authentication is required only for some HTTP verbs, making the remaining verbs accessible to anyone | ["PUT", "DELETE"] |

The following configuration for a collection will allow all GET requests to proceed without authentication, while POST, PUT and DELETE requests will require authentication.

"settings": {

"authenticate": ["POST", "PUT", "DELETE"]

}

See more information about collection specifications and their configuration.
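The three forms of the authenticate property can be read as a single decision per request. The following sketch shows that interpretation; requiresAuthentication is a hypothetical helper written for illustration and does not reflect API's internal code.

```javascript
// Hypothetical helper mirroring the three documented forms of `authenticate`.
function requiresAuthentication(authenticate, method) {
  // Property omitted: the documented default is to require authentication.
  if (authenticate === undefined) return true;

  // true / false: applies to every HTTP verb.
  if (typeof authenticate === 'boolean') return authenticate;

  // Array: only the listed verbs require authentication.
  if (Array.isArray(authenticate)) {
    return authenticate.includes(method.toUpperCase());
  }

  throw new TypeError('authenticate must be a Boolean or an array of HTTP verbs');
}

const settings = { authenticate: ['POST', 'PUT', 'DELETE'] };

console.log(requiresAuthentication(settings.authenticate, 'GET'));  // false
console.log(requiresAuthentication(settings.authenticate, 'POST')); // true
```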

Authentication errors

API responds with HTTP 401 Unauthorized errors when either the supplied credentials are incorrect or an invalid token has been provided. The WWW-Authenticate header indicates the nature of the error. In the case of an expired access token, a new one should be requested.

Invalid credentials

HTTP/1.1 401 Unauthorized

WWW-Authenticate: Bearer, error="invalid_credentials", error_description="Invalid credentials supplied"

Content-Type: application/json

content-length: 18

Date: Sun, 17 Sep 2017 17:44:48 GMT

Connection: close

Invalid or expired token

HTTP/1.1 401 Unauthorized

WWW-Authenticate: Bearer, error="invalid_token", error_description="Invalid or expired access token"

Content-Type: application/json

content-length: 18

Date: Sun, 17 Sep 2017 17:46:28 GMT

Connection: close
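A consumer can tell the two cases apart by reading the error parameter of the WWW-Authenticate header. The sketch below parses header values of the form shown in the examples above; parseBearerError and shouldRequestNewToken are hypothetical names, and the parser handles only simple key="value" pairs rather than the full header grammar.

```javascript
// Hypothetical helpers; handle only key="value" pairs as in the examples above.

// Extract the quoted parameters from a WWW-Authenticate header value.
function parseBearerError(headerValue) {
  const params = {};
  const pattern = /(\w+)="([^"]*)"/g;
  let match;

  while ((match = pattern.exec(headerValue)) !== null) {
    params[match[1]] = match[2];
  }

  return params;
}

// An invalid or expired token means a new token should be requested.
function shouldRequestNewToken(headerValue) {
  return parseBearerError(headerValue).error === 'invalid_token';
}

const header = 'Bearer, error="invalid_token", error_description="Invalid or expired access token"';

console.log(parseBearerError(header).error);  // invalid_token
console.log(shouldRequestNewToken(header));   // true
```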

Access control

API includes a fully-fledged access control list (ACL) that makes it possible to specify in fine detail what each API client is permitted to do.

ACL terminology

The access control list specifies which clients can access the various resources of an API instance. Clients can have permissions assigned to them directly, or via roles, which can in turn extend other roles.

Resources

A resource is any entity in API that requires some level of authorisation to be accessed, like a collection or a custom endpoint. Resources are identified by a unique key with the following formats.

| Key format | Description | Example |
|---|---|---|
| clients | Access to API clients | clients |
| collection:{DB}_{NAME} | Access to the collection named NAME in the database DB | collection:library_book |
| endpoint:{VERSION}_{NAME} | Access to the custom endpoint named NAME and version VERSION | endpoint:v1_full-book |
| media:{NAME} | Access to the media bucket named NAME | media:photos |
| roles | Access to API roles | roles |
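When building ACL requests programmatically, the key formats above can be produced with a few small functions. The resourceKey object below is a hypothetical convenience for illustration, not part of API.

```javascript
// Hypothetical helpers that produce the documented resource key formats.
const resourceKey = {
  collection: (db, name) => `collection:${db}_${name}`,
  endpoint: (version, name) => `endpoint:${version}_${name}`,
  media: (name) => `media:${name}`
};

console.log(resourceKey.collection('library', 'book')); // collection:library_book
console.log(resourceKey.endpoint('v1', 'full-book'));   // endpoint:v1_full-book
console.log(resourceKey.media('photos'));               // media:photos
```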

To specify what permissions someone has over a resource, an access matrix is used. It consists of an object that maps each of the CRUD methods (create, read, update and delete) to a value that determines whether that operation is allowed or not.

For example, the following matrices specify that on the library/book collection, the given client can read any document and update their own documents, whereas in the library/author collection they can create, read and update any documents, being limited to deleting only the documents they have created.

{

"resources": {

"collection:library_book": {

"create": false,

"delete": false,

"deleteOwn": false,

"read": true,

"readOwn": false,

"update": false,

"updateOwn": true

},

"collection:library_author": {

"create": true,

"delete": false,

"deleteOwn": true,

"read": true,

"readOwn": false,

"update": true,

"updateOwn": false

}

}

}

The table below shows all the CRUD methods supported.

| Method | Description |
|---|---|
| create | Permission to create new instances of the resource |
| delete | Permission to delete instances of the resource |
| deleteOwn | Permission to delete instances of the resource that have been created by the requesting client |
| read | Permission to read instances of the resource |
| readOwn | Permission to read instances of the resource that have been created by the requesting client |
| update | Permission to update instances of the resource |
| updateOwn | Permission to update instances of the resource that have been created by the requesting client |
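To make the relationship between a method and its Own variant concrete, here is a minimal sketch of how an access matrix could be evaluated. It assumes that, for example, update grants access to any document while updateOwn applies only to documents created by the requesting client; isAllowed is a hypothetical function, and it treats any truthy value (including a permissions object) as granted.

```javascript
// Hypothetical evaluation of an access matrix; assumed semantics, not API's code.
// `method` is one of: create, read, update, delete.
// `isOwner` tells whether the requesting client created the document.
function isAllowed(matrix, method, isOwner) {
  // The plain method grants access regardless of ownership.
  if (matrix[method]) return true;

  // The "Own" variant grants access only to the document's creator.
  return Boolean(isOwner && matrix[`${method}Own`]);
}

// The library/book matrix from the example above.
const bookAccess = {
  create: false,
  delete: false,
  deleteOwn: false,
  read: true,
  readOwn: false,
  update: false,
  updateOwn: true
};

console.log(isAllowed(bookAccess, 'read', false));   // true
console.log(isAllowed(bookAccess, 'update', false)); // false
console.log(isAllowed(bookAccess, 'update', true));  // true
console.log(isAllowed(bookAccess, 'delete', true));  // false
```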

Advanced permissions for collection resources

When setting up the access matrix for a collection resource, it's possible to define finer-grained permissions that limit access to a subset of the fields or to documents that match a certain query.

To do this, the Boolean value that determines whether access is granted (true) or denied (false) gives way to an object that can contain one or both of the following properties:

- fields: Limits the fields that the client has access to. Uses the MongoDB projection format, meaning that fields can be included or excluded, but not both. Example:

  { "read": { "fields": { "title": 1, "author": 1 } } }

- filter: Limits access to documents that match a given query. Supports any filtering operator. Example:

  { "read": { "filter": { "category": { "$in": ["art", "architecture"] } } } }

The following table shows how each of these properties is interpreted by the various access types.

| Method | fields | filter |
|---|---|---|
| create | N/A | N/A |
| delete | N/A | Controls which documents can be deleted |
| deleteOwn | N/A | Controls which documents can be deleted |
| read | Controls which fields will be displayed | Controls which documents can be read |
| readOwn | Controls which fields will be displayed | Controls which documents can be read |
| update | Controls which fields can be updated | Controls which documents can be updated |
| updateOwn | Controls which fields can be updated | Controls which documents can be updated |
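The effect of a fields projection on a document being read can be illustrated as follows. This is a simplified sketch of inclusion/exclusion projection, not the code API runs: applyFields is a hypothetical function, it decides the mode from the first entry, and it ignores special cases that a real MongoDB projection has.

```javascript
// Simplified, hypothetical projection: either include the listed fields (value 1)
// or exclude them (value 0). Mixed projections are not handled.
function applyFields(document, fields) {
  const names = Object.keys(fields);
  if (names.length === 0) return { ...document };

  const including = fields[names[0]] === 1;
  const result = {};

  for (const [key, value] of Object.entries(document)) {
    const listed = names.includes(key);
    if (including ? listed : !listed) {
      result[key] = value;
    }
  }

  return result;
}

const book = { title: 'Ulysses', author: 'James Joyce', isbn: '12345' };

console.log(applyFields(book, { title: 1, author: 1 }));
// { title: 'Ulysses', author: 'James Joyce' }
console.log(applyFields(book, { isbn: 0 }));
// { title: 'Ulysses', author: 'James Joyce' }
```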

Resources API

The Resources API provides a read-only endpoint for listing all the registered resources.

GET

/api/resources

Find all resources

Returns a list of all the registered resources

Parameters

No parameters

Responses

| Code | Description |
|---|---|
| 200 | Successful operation |
| 401 | Access token is missing or invalid |
| 403 | The client performing the operation doesn’t have appropriate permissions |

Clients

Clients represent users or applications that wish to interact with API. When not given administrator privileges (i.e. {"accessType": "admin"} in the database record), clients are subject to permissions defined in the access control list.

Creating a client

The Clients API makes it possible to create a client using a RESTful endpoint, as long as the requesting client has create access to the clients resource or has administrator access.

Creating admin clients

For security reasons, it's not possible to create clients with administrator access via the Clients API. If you need to create one, see the manual method of adding a client, using either the DADI CLI or the create-client script.

Request

POST /api/clients HTTP/1.1

Content-Type: application/json

Authorization: Bearer c389340b-718f-4eed-8e8e-3400a1c6cd5a

{

"clientId": "eduardo",

"secret": "squirrel"

}

Response

HTTP/1.1 200 OK

Content-Type: application/json

{

"results": [

{

"clientId": "eduardo",

"accessType": "user",

"resources": {},

"roles": []

}

]

}

The resources property in a client record shows the resources they have access to. By default, a client doesn't have access to anything until explicitly given the right permissions.

Let's see how we can give this client access to some resources.

Assigning permissions

The Clients API includes a set of RESTful endpoints to manage the resources that a client has access to. The following request would give a client full permissions to access the library/book collection.

Request

POST /api/clients/eduardo/resources HTTP/1.1

Content-Type: application/json

Authorization: Bearer c389340b-718f-4eed-8e8e-3400a1c6cd5a

{

"name": "collection:library_book",

"access": {

"create": true,

"delete": true,

"read": true,

"update": true

}

}

Response

HTTP/1.1 200 OK

Content-Type: application/json

{

"results": [

{

"clientId": "eduardo",

"accessType": "user",

"resources": {

"collection:library_book": {

"create": true,

"delete": true,

"deleteOwn": false,

"read": true,

"readOwn": false,

"update": true,

"updateOwn": false

}

},

"roles": []

}

]

}

At this point, eduardo can request an access token and access the library/book collection.

Adding data to client records

The Clients API allows developers to associate arbitrary data with client records. This can be used by consumer applications to store data like personal information, user preferences or any type of metadata.

This data is stored in an object called data within the client record, and it can be written to when a client is created (via a POST request) or at any point afterwards via an update (PUT request). See the Clients API specification for more details.

When updating a client, the data object in the request body is processed as a partial update, which means the following in relation to any existing data object associated with the record:

- New properties will be appended to the existing data object;
- Properties with the same name as those in the existing data object will be replaced;
- Properties set to null will be removed from the data object.

Example 1 (creating a client with data):

POST /api/clients HTTP/1.1

Content-Type: application/json

Authorization: Bearer c389340b-718f-4eed-8e8e-3400a1c6cd5a

{

"clientId": "eduardo",

"secret": "sssshhh!",

"data": {

"firstName": "Eduardo"

}

}

Example 2 (adding data to an existing client):

PUT /api/clients/eduardo HTTP/1.1

Content-Type: application/json

Authorization: Bearer c389340b-718f-4eed-8e8e-3400a1c6cd5a

{

"data": {

"lastName": "Boucas"

}

}

Example 3 (removing a data property from a client):

PUT /api/clients/eduardo HTTP/1.1

Content-Type: application/json

Authorization: Bearer c389340b-718f-4eed-8e8e-3400a1c6cd5a

{

"data": {

"firstName": null

}

}
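The partial-update rules listed earlier in this section can be expressed as a small merge function. The sketch below reproduces the outcome of Examples 2 and 3 on the client side; mergeData is a hypothetical name, and the code is an illustration of the documented behaviour rather than API's own implementation.

```javascript
// Hypothetical illustration of the documented partial-update rules.
function mergeData(existing, update) {
  const result = { ...existing };

  for (const [key, value] of Object.entries(update)) {
    if (value === null) {
      delete result[key];  // null removes the property
    } else {
      result[key] = value; // new properties are added, existing ones replaced
    }
  }

  return result;
}

let data = { firstName: 'Eduardo' };

data = mergeData(data, { lastName: 'Boucas' });
console.log(data); // { firstName: 'Eduardo', lastName: 'Boucas' }

data = mergeData(data, { firstName: null });
console.log(data); // { lastName: 'Boucas' }
```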

Data properties prefixed with an underscore (e.g. _userId) can only be set and modified by admin clients, working as read-only properties for normal clients.
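A consumer application may want to check this rule before sending an update. The sketch below splits a data object into properties a client of the given access type may write and those it may not. Both function names are hypothetical, and how API itself responds to a disallowed property (rejecting the request or ignoring the property) is not stated here, so the sketch only classifies.

```javascript
// Assumed rule, taken from the sentence above: underscore-prefixed data
// properties are writable by admin clients only.
function canWriteDataProperty(propertyName, accessType) {
  if (propertyName.startsWith('_')) {
    return accessType === 'admin';
  }
  return true;
}

// Split an incoming `data` object into accepted and rejected properties.
function filterWritableData(data, accessType) {
  const accepted = {};
  const rejected = [];

  for (const [key, value] of Object.entries(data)) {
    if (canWriteDataProperty(key, accessType)) {
      accepted[key] = value;
    } else {
      rejected.push(key);
    }
  }

  return { accepted, rejected };
}

console.log(filterWritableData({ firstName: 'Eduardo', _userId: 42 }, 'user'));
// { accepted: { firstName: 'Eduardo' }, rejected: [ '_userId' ] }
```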

Clients API

The Clients API provides a set of RESTful endpoints that allow the creation and management of clients, as well as granting and revoking access to resources and roles.

POST

/api/clients

Create a client

Creates a new client. The requesting client must have `create` access to the `clients` resource, or have an `accessType` of `admin`. Optionally, an arbitrary data object can be set using the `data` property.

Parameters

No parameters

Request body

- Content type: application/json

| Property | Type |
|---|---|
| clientId | string |
| secret | string |
| data | object |

Example:

{ "clientId": "eduardo", "secret": "squirrel", "data": { "firstName": "Eduardo" } }

Responses

| Code | Description |
|---|---|
| 201 | Client added successfully; the created client is returned with the secret omitted |
| 401 | Access token is missing or invalid |
| 403 | The client performing the operation doesn’t have appropriate permissions |
| 409 | A client with the given ID already exists |

GET

/api/clients

Find all clients

Returns an array of client records, with `secret` omitted.

Parameters

No parameters

Responses

| Code | Description |
|---|---|
| 200 | Successful operation; the clients are returned with the secret omitted. Response includes the roles granted and resources they have access to. |
| 401 | Access token is missing or invalid |
| 403 | The client performing the operation doesn’t have appropriate permissions |

GET

/api/clients/{clientId}

Find a client by ID

Returns a single client

Parameters

| Name | Type | Description | Required |

|---|---|---|---|

| clientId | string (path) | ID of client to return | Yes |

Responses

| Code | Description |
|---|---|
| 200 | Successful operation; the client is returned with the secret omitted. Response includes the roles granted and resources they have access to. |
| 401 | Access token is missing or invalid |
| 403 | The client performing the operation doesn’t have appropriate permissions |
| 404 | No client found with the given ID |

PUT

/api/clients/{clientId}

Update an existing client

Updates a client. It is not possible to change a client ID, as it is immutable. It is also not possible to change any resources or roles using this endpoint – the resources and roles endpoints should be used for that effect. For a non-admin client to update their own secret, they must include the current secret in the request payload.

Parameters

| Name | Type | Description | Required |

|---|---|---|---|

| clientId | string (path) | ID of client to update | Yes |

Request body

- Content type: application/json

| Property | Type |
|---|---|
| data | object |
| currentSecret | string |
| secret | string |

Example:

{ "data": { "firstName": "Eduardo" }, "currentSecret": "current-secret", "secret": "new-secret" }

Responses

| Code | Description |
|---|---|
| 200 | Successful operation; the client is returned with the secret omitted. Response includes the roles granted and resources they have access to. |
| 400 | To update the client secret, the current secret must be supplied via the `currentSecret` property / The supplied current secret is not valid |
| 401 | Access token is missing or invalid |
| 403 | The client performing the operation doesn’t have appropriate permissions |
| 404 | No client found with the given ID |

DELETE

/api/clients/{clientId}

Delete an existing client

Deletes a client.

Parameters

| Name | Type | Description | Required |

|---|---|---|---|

| clientId | string (path) | | Yes |

Responses

| Code | Description |

|---|---|

| 204 | Successful operation |

| 401 | Access token is missing or invalid |

| 403 | The client performing the operation doesn’t have appropriate permissions |

| 404 | No client found with the given ID |

GET

/api/client

Find the current client

Returns the client associated with the bearer token present in the request

Parameters

No parameters

Responses

| Code | Description |
|---|---|
| 200 | Successful operation; the client is returned with the secret omitted. Response includes the roles granted and resources they have access to. |
| 401 | Access token is missing or invalid |
| 403 | The client performing the operation doesn’t have appropriate permissions |

PUT

/api/client

Updates the current client

Updates the client associated with the bearer token present in the request. It is not possible to change a client ID, as it is immutable. It is also not possible to change any resources or roles using this endpoint – the resources and roles endpoints should be used for that effect. For a non-admin client to update their own secret, they must include the current secret in the request payload.

Parameters

No parameters

Request body

- Content type: application/json

| Property | Type |
|---|---|
| data | object |
| currentSecret | string |
| secret | string |

Example:

{ "data": { "firstName": "Eduardo" }, "currentSecret": "current-secret", "secret": "new-secret" }

Responses

| Code | Description |
|---|---|
| 200 | Successful operation; the client is returned with the secret omitted. Response includes the roles granted and resources they have access to. |
| 400 | To update the client secret, the current secret must be supplied via the `currentSecret` property / The supplied current secret is not valid |
| 401 | Access token is missing or invalid |
| 403 | The client performing the operation doesn’t have appropriate permissions |

POST

/api/clients/{clientId}/roles

Assign roles to an existing client

The request body should contain an array of roles to assign to the specified client.

Parameters

| Name | Type | Description | Required |

|---|---|---|---|

| clientId | string (path) | The Client to assign Roles to | Yes |

Request body

- Content type: application/json

| Property | Type |
|---|---|
| N/A | array |

Example:

[ "employee" ]

Responses

| Code | Description |
|---|---|
| 200 | Role added to Client successfully; the updated client is returned with the secret omitted |
| 401 | Access token is missing or invalid |
| 403 | The client performing the operation doesn’t have appropriate permissions |
| 404 | No client found with the given ID |

DELETE

/api/clients/{clientId}/roles/{roleName}

Unassign role from an existing client

Parameters

| Name | Type | Description | Required |

|---|---|---|---|

| clientId | string (path) | The client that is being unassigned the specified Role | Yes |

| roleName | string (path) | The name of the role to unassign | Yes |

Responses

| Code | Description |

|---|---|

| 204 | Successful operation |

| 401 | Access token is missing or invalid |

| 403 | The client performing the operation doesn’t have appropriate permissions |

| 404 | Client not found or role not assigned to client |

POST

/api/clients/{clientId}/resources

Give an existing client permissions to access a resource

The request body should contain an object mapping access types to either a Boolean (granting or revoking that access type) or an object specifying field-level permissions and/or permission filters

Parameters

| Name | Type | Description | Required |

|---|---|---|---|

| clientId | string (path) | | Yes |

Request body

Content type: application/json

| Property | Type |
|---|---|
| name | string |
| access | object |

Example: { "name": "collection:library_book", "access": { "create": true, "delete": false, "deleteOwn": true, "read": true, "readOwn": false, "update": false, "updateOwn": true } }

Responses

| Code | Description |

|---|---|

| 200 | Resource added to Client successfully; the updated client is returned with the secret omitted |

| 401 | Access token is missing or invalid |

| 403 | The client performing the operation doesn’t have appropriate permissions |

| 404 | No client found with the given ID |
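The access object does not have to contain only Booleans. As a sketch, the body below mixes Boolean values with a field-level restriction and a filter, using the `fields` and `filter` shapes that appear in the access matrices of the Roles section. The field names (`title`, `author`, `published`) are invented for illustration, and `isValidAccess` is a hypothetical sanity check, not something API provides.

```javascript
// Example body for POST /api/clients/{clientId}/resources.
// Field names are hypothetical.
const resourceBody = {
  name: 'collection:library_book',
  access: {
    create: true,
    // Read access restricted by a permission filter
    read: { filter: { published: true } },
    // Update access restricted to specific fields
    update: { fields: { title: 1, author: 1 } },
    delete: false
  }
}

const ACCESS_TYPES = [
  'create', 'delete', 'deleteOwn', 'read', 'readOwn', 'update', 'updateOwn'
]

// Hypothetical check: every key is a known access type and every value
// is either a Boolean or an object.
function isValidAccess (access) {
  return Object.keys(access).every(type => {
    const value = access[type]

    return ACCESS_TYPES.includes(type) &&
      (typeof value === 'boolean' || (value !== null && typeof value === 'object'))
  })
}

console.log(isValidAccess(resourceBody.access)) // true
console.log(JSON.stringify(resourceBody))
```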

DELETE

/api/clients/{clientId}/resources/{resourceId}

Revoke an existing client's permission for the specified resource

Parameters

| Name | Type | Description | Required |

|---|---|---|---|

| clientId | string (path) | | Yes |

| resourceId | string (path) | | Yes |

Responses

| Code | Description |

|---|---|

| 204 | Access revoked successfully; the updated client is returned with the secret omitted |

| 401 | Access token is missing or invalid |

| 403 | The client performing the operation doesn’t have appropriate permissions |

| 404 | Client not found or resource not assigned to client |

PUT

/api/clients/{clientId}/resources/{resourceId}

Update an existing client's resource permissions

The request body should contain an object mapping access types to either a Boolean (granting or revoking that access type) or an object specifying field-level permissions and/or permission filters

Parameters

| Name | Type | Description | Required |

|---|---|---|---|

| clientId | string (path) | | Yes |

| resourceId | string (path) | | Yes |

Request body

Content type: application/json

| Property | Type |
|---|---|
| create | boolean or object |
| delete | boolean or object |
| deleteOwn | boolean or object |
| read | boolean or object |
| readOwn | boolean or object |
| update | boolean or object |
| updateOwn | boolean or object |

Example: { "create": true, "delete": false, "deleteOwn": true, "read": true, "readOwn": false, "update": false, "updateOwn": true }

Responses

| Code | Description |

|---|---|

| 200 | Resource updated successfully; the updated client is returned with the secret omitted |

| 401 | Access token is missing or invalid |

| 403 | The client performing the operation doesn’t have appropriate permissions |

| 404 | Client not found or resource not assigned to client |

Roles

A role is a group of users that share a set of permissions to access a list of resources. In practice, it's an alternative way of giving permissions to clients.

For example, imagine that you wanted to give clients C1 and C2 a set of permissions to access resource R. You could either grant permissions to that resource individually to each client record, or you could grant the permissions to a role and assign it to both clients.

Reconciling client and role permissions

A client may have their own resource permissions as well as permissions given by roles. Whenever a clash occurs, i.e. permissions for the same resource given directly and from a role, the access matrices are merged so that the broadest set of permissions is obtained.

For example, imagine that a client has the following access matrices for a given resource, one assigned directly and the other resulting from a role.

Matrix 1

{

"create": false,

"delete": true,

"deleteOwn": false,

"read": {

"filter": {

"fieldOne": "valueOne"

}

},

"readOwn": false,

"update": {

"fields": {

"fieldOne": 1

}

},

"updateOwn": false

}

Matrix 2

{

"create": true,

"delete": false,

"deleteOwn": true,

"read": true,

"readOwn": false,

"update": {

"fields": {

"fieldTwo": 1,

"fieldThree": 1

}

},

"updateOwn": false

}

Resulting matrix

{

"create": true,

"delete": true,

"deleteOwn": true,

"read": true,

"readOwn": false,

"update": {

"fields": {

"fieldOne": 1,

"fieldTwo": 1,

"fieldThree": 1

}

},

"updateOwn": false

}
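The merge above can be approximated in code. The following is a sketch under stated assumptions, not API's actual implementation: `mergeAccessValue` and `mergeMatrices` are hypothetical names, `fields` objects are assumed to be inclusion-style projections (values of 1), and two filters are combined with `$or`, which is a guess at what "broadest" means in that case. Where the two sides carry different kinds of restriction, the sketch errs towards the broader result.

```javascript
const ACCESS_TYPES = [
  'create', 'delete', 'deleteOwn', 'read', 'readOwn', 'update', 'updateOwn'
]

// Merges the values two matrices hold for a single access type.
function mergeAccessValue (a, b) {
  // Unrestricted access on either side wins
  if (a === true || b === true) return true

  // If one side grants nothing, the other side is the result
  if (!a) return b || false
  if (!b) return a

  // Both sides are objects: a restriction is kept only if both sides
  // have it, because otherwise one side is already unrestricted there.
  const merged = {}

  if (a.fields && b.fields) {
    // Assumption: inclusion-style projections, so the union is a merge
    merged.fields = Object.assign({}, a.fields, b.fields)
  }

  if (a.filter && b.filter) {
    // Assumption: a document matching either filter is accessible
    merged.filter = { $or: [a.filter, b.filter] }
  }

  // No shared restriction left: treated as unrestricted in this sketch
  return Object.keys(merged).length > 0 ? merged : true
}

function mergeMatrices (first, second) {
  const result = {}

  ACCESS_TYPES.forEach(type => {
    result[type] = mergeAccessValue(first[type], second[type])
  })

  return result
}

const matrix1 = {
  create: false,
  delete: true,
  deleteOwn: false,
  read: { filter: { fieldOne: 'valueOne' } },
  readOwn: false,
  update: { fields: { fieldOne: 1 } },
  updateOwn: false
}

const matrix2 = {
  create: true,
  delete: false,
  deleteOwn: true,
  read: true,
  readOwn: false,
  update: { fields: { fieldTwo: 1, fieldThree: 1 } },
  updateOwn: false
}

console.log(JSON.stringify(mergeMatrices(matrix1, matrix2), null, 2))
```

Run against Matrix 1 and Matrix 2, this produces the same values as the resulting matrix shown above.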

Extending roles

Roles can extend (or inherit from) other roles. If role R1 extends role R2, then clients with R1 will get the permissions granted by that role plus any permissions granted by R2. The inheritance chain can go on indefinitely.

Role inheritance is a good way to represent hierarchy typically present in organisations. For example, you could create a manager role that extends an employee role, since managers can usually do all the operations available to employees plus some of their own.
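To make the inheritance chain concrete, here is a small sketch that walks the `extends` references of a set of in-memory role records. The `roles` object, the `director` role and the `resolveRoleChain` helper are hypothetical; API stores and resolves roles internally, and this only illustrates the idea.

```javascript
// Hypothetical in-memory role records, keyed by name
const roles = {
  employee: { name: 'employee', extends: null },
  manager: { name: 'manager', extends: 'employee' },
  director: { name: 'director', extends: 'manager' }
}

// Returns the given role followed by every role it inherits from
function resolveRoleChain (roleName, allRoles) {
  const chain = []
  const seen = new Set()
  let current = roleName

  while (current && allRoles[current] && !seen.has(current)) {
    seen.add(current) // guards against circular `extends` references
    chain.push(current)
    current = allRoles[current].extends
  }

  return chain
}

console.log(resolveRoleChain('director', roles))
// [ 'director', 'manager', 'employee' ]
```

A client assigned the `director` role would receive the permissions of all three roles in the chain.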

Roles API

The Roles API provides a set of RESTful endpoints that allow the creation and management of roles, including granting and revoking access to resources.

POST

/api/roles

Create a new role

The body must contain a `name` property with the name of the role to create. Optionally, it may also contain an `extends` property that specifies the name of a role to be extended

Parameters

No parameters

Request body

Content type: application/json

| Property | Type |
|---|---|
| name | string |
| extends | string |

Example: { "name": "manager", "extends": "employee" }

Responses

| Code | Description |

|---|---|

| 201 | Successful operation |

| 401 | Access token is missing or invalid |

| 403 | The client performing the operation doesn’t have appropriate permissions |

| 409 | Role already exists |

GET

/api/roles/{roleName}

Find a role by name

Returns a single role

Parameters

| Name | Type | Description | Required |

|---|---|---|---|

| roleName | string (path) | The name of the Role to return | Yes |

Responses

| Code | Description |

|---|---|

| 200 | Successful operation |

| 401 | Access token is missing or invalid |

| 403 | The client performing the operation doesn’t have appropriate permissions |

| 404 | No role found with the given name |

PUT

/api/roles/{roleName}

Update an existing role

The request body may contain an `extends` property that specifies the name of a role to be extended; if that property is set to `null`, the inheritance relationship is removed.

Parameters

| Name | Type | Description | Required |

|---|---|---|---|

| roleName | string (path) | The name of the Role to update | Yes |

Request body

Content type: application/json

| Property | Type |
|---|---|
| extends | string |

Example: { "extends": "employee" }

Responses

| Code | Description |

|---|---|

| 200 | Successful operation |

| 400 | The role being extended does not exist |

| 401 | Access token is missing or invalid |

| 403 | The client performing the operation doesn’t have appropriate permissions |

| 404 | No role found with the given name |

DELETE

/api/roles/{roleName}

Delete an existing Role

Parameters

| Name | Type | Description | Required |

|---|---|---|---|

| roleName | string (path) | The name of the Role to delete | Yes |

Responses

| Code | Description |

|---|---|

| 204 | Successful operation |

| 401 | Access token is missing or invalid |

| 403 | The client performing the operation doesn’t have appropriate permissions |

| 404 | No role found with the given name |

POST

/api/roles/{roleName}/resources

Give an existing role permissions to access a resource

The request body should contain an object mapping access types to either a Boolean (granting or revoking that access type) or an object specifying field-level permissions and/or permission filters

Parameters

| Name | Type | Description | Required |

|---|---|---|---|

| roleName | string (path) | | Yes |

Request body

Content type: application/json

| Property | Type |
|---|---|
| name | string |
| access | object |

Example: { "name": "collection:library_book", "access": { "create": true, "delete": false, "deleteOwn": true, "read": true, "readOwn": false, "update": false, "updateOwn": true } }

Responses

| Code | Description |

|---|---|

| 200 | Resource added to role successfully; the updated role is returned |

| 401 | Access token is missing or invalid |

| 403 | The client performing the operation doesn’t have appropriate permissions |

| 404 | No role found with the given name |

DELETE

/api/roles/{roleName}/resources/{resourceId}

Revoke an existing role's permission for the specified resource

Parameters

| Name | Type | Description | Required |

|---|---|---|---|

| roleName | string (path) | | Yes |

| resourceId | string (path) | | Yes |

Responses

| Code | Description |

|---|---|

| 204 | Access revoked successfully; the updated role is returned |

| 401 | Access token is missing or invalid |

| 403 | The client performing the operation doesn’t have appropriate permissions |

| 404 | Role not found or resource not assigned to role |

PUT

/api/roles/{roleName}/resources/{resourceId}

Update an existing role's resource permissions

The request body should contain an object mapping access types to either a Boolean (granting or revoking that access type) or an object specifying field-level permissions and/or permission filters

Parameters

| Name | Type | Description | Required |

|---|---|---|---|

| roleName | string (path) | | Yes |

| resourceId | string (path) | | Yes |

Request body

Content type: application/json

| Property | Type |
|---|---|
| create | boolean or object |
| delete | boolean or object |
| deleteOwn | boolean or object |
| read | boolean or object |
| readOwn | boolean or object |
| update | boolean or object |
| updateOwn | boolean or object |

Example: { "create": true, "delete": false, "deleteOwn": true, "read": true, "readOwn": false, "update": false, "updateOwn": true }

Responses

| Code | Description |

|---|---|

| 200 | Resource updated successfully; the updated role is returned |

| 401 | Access token is missing or invalid |

| 403 | The client performing the operation doesn’t have appropriate permissions |

| 404 | Role not found or resource not assigned to role |

Using models directly

It's possible to tap into the access control list programmatically, which is useful when creating custom JavaScript endpoints or collection hooks. The ACL models allow you to create and modify clients and roles, as well as compute the permissions associated with a client and determine whether they can access a given resource.

The @dadi/api NPM module exports an ACL object with a series of public methods:

- access.get()

- client.create()

- client.delete()

- client.get()

- client.resourceAdd()

- client.resourceRemove()

- client.resourceUpdate()

- client.roleAdd()

- client.roleRemove()

- client.update()

- role.create()

- role.delete()

- role.get()

- role.resourceAdd()

- role.resourceRemove()

- role.resourceUpdate()

- role.update()

access.get

Returns the access matrix representing the permissions of a given client to access a resource.

It expects the ID of the client as well as their access type, which means you may need to obtain this information with a separate query first. The reason for this is that the client ID + access type pair are encoded in the bearer token's JWT and are easily available via the req.dadiApiClient property.

Receives:

- `client.clientId` (type: String): ID of the client
- `client.accessType` (type: String): Access type of the client
- `resource` (type: String): ID of the resource
- `resolveOwnTypes` (type: Boolean, default: true): Whether to translate `*Own` types (e.g. `readOwn`) into a `read` property with a filter

Returns:

Promise<Object>: an object representing an access matrix.

Example:

const ACL = require('@dadi/api').ACL

ACL.access.get({

clientId: 'restfulJohn',

accessType: 'user'

}, 'collection:v1_foobar').then(access => {

if (!access.read) {

console.log('Client does not have `read` access!')

}

})
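In a custom endpoint or hook, the same call can be wrapped in a small helper that reads the client details from the request. The sketch below is hypothetical: `canRead` is not part of API, and the assumption that `req.dadiApiClient` exposes `clientId` and `accessType` properties is inferred from the description above. The ACL object is passed in as an argument, so the helper can be exercised here with a stub instead of the real module.

```javascript
// `acl` is expected to be the ACL object exported by @dadi/api. It is
// injected so that the helper can be tried out with a stub.
function canRead (acl, req, resource) {
  // Assumed shape: { clientId, accessType }
  const { clientId, accessType } = req.dadiApiClient || {}

  if (!clientId) return Promise.resolve(false)

  return acl.access
    .get({ clientId, accessType }, resource)
    .then(access => Boolean(access.read))
}

// Stub standing in for the real ACL object, for illustration only
const stubAcl = {
  access: {
    get: client => Promise.resolve({ read: client.clientId === 'restfulJohn' })
  }
}

const stubReq = {
  dadiApiClient: { clientId: 'restfulJohn', accessType: 'user' }
}

canRead(stubAcl, stubReq, 'collection:v1_foobar').then(allowed => {
  console.log(allowed ? 'read allowed' : 'read denied')
})
```

In real code, `stubAcl` would be replaced with `require('@dadi/api').ACL`.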

client.create

Creates a new client.

Note that all clients created using the ACL model have an access type of user. To create a client with an access type of admin, you must do so manually.

Receives (named parameters):

- `clientId` (type: String): ID of the client to create
- `secret` (type: String): The client secret

Returns:

Promise<Object>: an object representing the newly-created client.

Example:

const ACL = require('@dadi/api').ACL

ACL.client.create({

clientId: 'restfulJohn',

secret: 'superSecret!'

})

client.delete

Deletes a client.

Receives:

clientId(type:String): ID of the client to delete

Returns:

Promise with:

{deletedCount, totalCount}(Object): Object indicating the number of clients that were deleted and the number of clients remaining after the operation

Example:

const ACL = require('@dadi/api').ACL

ACL.client.delete('restfulJohn')

client.get

Returns a client by ID.

If secret is passed as a second argument, only a client that matches both the ID and the secret supplied will be retrieved.

Receives:

- `clientId` (type: String): ID of the client
- `secret` (type: String, optional): The client secret

Returns:

Promise<Object>: an object with a results property containing an array of matching clients.

Example:

const ACL = require('@dadi/api').ACL

ACL.client.get({

clientId: 'restfulJohn',

secret: 'superSecret!'

}).then(({results}) => {

if (results.length === 0) {

return console.log('Wrong credentials!')

}

console.log(results[0])

})

client.resourceAdd

Gives a client permission to access a given resource.

Receives:

- `clientId` (type: String): ID of the client
- `resource` (type: String): ID of the resource
- `access` (type: Object): Access matrix

Returns:

Promise<Object>: the updated client.

Example:

const ACL = require('@dadi/api').ACL

ACL.client.resourceAdd(

'restfulJohn',

'collection:v1_things',

{create: true, read: true}

)

client.resourceRemove

Removes a client's permission to access a given resource.

Receives:

- `clientId` (type: String): ID of the client
- `resource` (type: String): ID of the resource

Returns:

Promise<Object>: the updated client.

Example:

const ACL = require('@dadi/api').ACL

ACL.client.resourceRemove(

'restfulJohn',

'collection:v1_things'

)

client.resourceUpdate

Updates a client's permission to access a given resource.

Receives:

- `clientId` (type: String): ID of the client
- `resource` (type: String): ID of the resource
- `access` (type: Object): New access matrix

Returns:

Promise<Object>: the updated client.

Example:

const ACL = require('@dadi/api').ACL

ACL.client.resourceUpdate(

'restfulJohn',

'collection:v1_things',

{create: false, update: true}

)

client.roleAdd

Assigns roles to a client.

Receives:

- `clientId` (type: String): ID of the client
- `roles` (type: Array<String>): Names of roles to assign

Returns:

Promise<Object>: the updated client.

Example:

const ACL = require('@dadi/api').ACL

ACL.client.roleAdd(

'restfulJohn',

['operator', 'administrator']

)

client.roleRemove

Unassigns roles from a client.

Receives:

- `clientId` (type: String): ID of the client
- `roles` (type: Array<String>): Names of roles to unassign

Returns:

Promise<Object>: the updated client.

Example:

const ACL = require('@dadi/api').ACL

ACL.client.roleRemove(

'restfulJohn',

['operator', 'administrator']

)

client.update

Updates a client.

Receives:

- `clientId` (type: String): ID of the client to update
- `update` (type: Object): The update object

Returns:

Promise<Object>: an object representing the updated client.

Example:

const ACL = require('@dadi/api').ACL

ACL.client.update(

'restfulJohn',

{secret: 'newSuperSecret!'}

)

role.create

Creates a new role.

Receives (named parameters):

- `name` (type: String): Name of the role
- `extends` (type: String, optional): The name of a role to be extended

Returns:

Promise<Object>: an object representing the newly-created role.

Example:

const ACL = require('@dadi/api').ACL

ACL.role.create({

name: 'administrator',

extends: 'operator'

})

role.delete

Deletes a role. If the role is extended by other roles, their extends property will be set to null.

Receives:

name(type:String): Name of the role to be deleted

Returns:

Promise with:

{deletedCount, totalCount}(Object): Object indicating the number of roles that were deleted and the number of roles remaining after the operation

Example:

const ACL = require('@dadi/api').ACL

ACL.role.delete('operator')

role.get

Returns roles by name.

Receives:

names(type:Array<String>): Names of the roles to retrieve

Returns:

Promise<Object>: an object with a results property containing an array of matching roles.

Example:

const ACL = require('@dadi/api').ACL

ACL.role.get(['operator', 'administrator'])

role.resourceAdd

Gives a role permission to access a given resource.

Receives:

- `role` (type: String): Name of the role
- `resource` (type: String): ID of the resource
- `access` (type: Object): Access matrix

Returns:

Promise<Object>: the updated role.

Example:

const ACL = require('@dadi/api').ACL

ACL.role.resourceAdd(

'operator',

'collection:v1_things',

{create: true, read: true}

)

role.resourceRemove

Removes a role's permission to access a given resource.

Receives:

- `role` (type: String): Name of the role
- `resource` (type: String): ID of the resource

Returns:

Promise<Object>: the updated role.

Example:

const ACL = require('@dadi/api').ACL

ACL.role.resourceRemove(

'operator',

'collection:v1_things'

)

role.resourceUpdate

Updates a role's permission to access a given resource.

Receives:

- `role` (type: String): Name of the role
- `resource` (type: String): ID of the resource
- `access` (type: Object): New access matrix

Returns:

Promise<Object>: the updated role.

Example:

const ACL = require('@dadi/api').ACL

ACL.role.resourceUpdate(

'operator',

'collection:v1_things',

{create: false, update: true}

)

role.update

Updates a role.

Receives:

- `role` (type: String): Name of the role
- `update` (type: Object): The update object

Returns:

Promise<Object>: an object representing the updated role.

Example:

const ACL = require('@dadi/api').ACL

ACL.role.update(

'superAdministrator',

{extends: 'administrator'}

)

Collections

A Collection represents data within your API. Collections can be thought of as the data models for your application and define how API connects to the underlying data store to store and retrieve data.

API can handle the creation of new collections or tables in the configured data store simply by creating collection specification files. To connect collections to existing data, simply name the file using the same name as the existing collection/table.

All that is required to connect to your data is a collection specification file, and once that is created API provides data access over a REST endpoint and programmatically via the API's Model module.

Collections directory

Collection specifications are simply JSON files stored in your application's collections directory. The location of this directory is configurable using the configuration property paths.collections but defaults to workspace/collections. The structure of this directory is as follows:

my-api/

workspace/

collections/

1.0/ # API version

library/ # database

collection.books.json # collection specification file

API Version

Specific versions of your API are represented as sub-directories of the collections directory. Versioning of collections and endpoints acts as a formal contract between the API and its consumers.

Imagine a situation where a breaking change needs to be introduced — e.g. adding or removing a collection field, or changing the output format of an endpoint. A good way to handle this would be to introduce the new structure as version 2.0 and retain the old one as version 1.0, warning consumers of its deprecation and potentially giving them a window of time before the functionality is removed.

All requests to collection and custom endpoints must include the version in the URL, mimicking the hierarchy defined in the folder structure.

Database

Collection documents may be stored in separate databases in the underlying data store, represented by the name of the "database" directory.

Note: This feature is disabled by default. To enable separate databases in your API, the configuration setting `database.enableCollectionDatabases` must be `true`. See Collection-specific Databases for more information.

Collection specification file

A collection specification file is a JSON file containing at least one field specification and a configuration block.

The naming convention for collection specifications is collection.<collection name>.json where <collection name> is used as the name of the collection/table in the underlying data store.

Use the plural form

We recommend you use the plural form when naming collections in order to keep consistency across your API. Using the singular form means a GET request for a list of results can easily be confused with a request for a single entity.

For example, a collection named book (collection.book.json) will accept GET requests at the following endpoints:

https://api.somedomain.tech/1.0/library/book

https://api.somedomain.tech/1.0/library/book/560a44b33a4d7de29f168ce4

It's not obvious whether or not the first example is going to return all books, as intended. Using the plural form makes it clear what the endpoint's intended behaviour is:

https://api.somedomain.tech/1.0/library/books

https://api.somedomain.tech/1.0/library/books/560a44b33a4d7de29f168ce4

The Collection Endpoint

API automatically generates a REST endpoint for each collection specification loaded from the collections directory. The format of the REST endpoint follows the convention /{version}/{database}/{collection name}, which matches the structure of the collections directory.

my-api/

workspace/

collections/

1.0/ # API version

library/ # database

collection.books.json # collection specification file

With the above directory structure API will generate this REST endpoint: https://api.somedomain.tech/1.0/library/books.
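The mapping from directory structure to endpoint can be sketched as a small function. `endpointFromSpecPath` is a hypothetical helper written for this illustration; it is not how API itself loads collections, and it assumes the path is given relative to the collections directory with forward slashes.

```javascript
// Derives the REST endpoint path from the location of a collection
// specification file, relative to the collections directory.
function endpointFromSpecPath (relativePath) {
  const [version, database, fileName] = relativePath.split('/')
  const match = /^collection\.(.+)\.json$/.exec(fileName || '')

  if (!version || !database || !match) {
    throw new Error(`Unexpected collection path: ${relativePath}`)
  }

  // match[1] is the <collection name> part of collection.<collection name>.json
  return `/${version}/${database}/${match[1]}`
}

console.log(endpointFromSpecPath('1.0/library/collection.books.json'))
// /1.0/library/books
```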

The JSON File

Collection specification files can be created and edited in any text editor, then added to the API's collections directory. API will load all valid collections when it boots.

Minimum Requirements

The JSON file must contain a fields property and, optionally, a settings property.

- `fields`: must contain at least one field specification. See Collection Fields for the format of fields.
- `settings`: API uses sensible defaults for collection configuration, but these can be overridden using properties in the `settings` block. See Collection Settings for details.

A skeleton collection specification

{

"fields": {

"field1": {

}

},

"settings": {

}

}
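Before adding a specification file to the collections directory, it can be handy to check it against these minimum requirements. The function below is a hypothetical pre-flight check, not API's own validation: it only verifies that `fields` holds at least one entry and that each entry declares a `type`, which the Collection Fields section describes as the only required field property. Note that the empty `field1` in the skeleton above would be reported until a `type` is added.

```javascript
// Returns a list of problems found in a collection specification object
function checkSpecification (spec) {
  const problems = []
  const fields = spec && spec.fields

  if (!fields || typeof fields !== 'object' || Object.keys(fields).length === 0) {
    problems.push('"fields" must contain at least one field specification')

    return problems
  }

  Object.keys(fields).forEach(name => {
    const field = fields[name]

    if (!field || typeof field.type !== 'string') {
      problems.push('field "' + name + '" is missing the required "type" property')
    }
  })

  return problems
}

const skeleton = { fields: { field1: {} }, settings: {} }
const minimal = { fields: { title: { type: 'String' } }, settings: {} }

console.log(checkSpecification(skeleton))
console.log(checkSpecification(minimal)) // []
```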

Collection Fields

Each field in a collection is defined using the following format. The only required property is type which tells API what data types the field can contain.

A basic field specification

"fieldName": {

"type": "String"

}

A complete field specification

"fieldName": {
  "type": "String",
  "required": true,
  "label": "Title",
  "comments": "The title of the entry",
  "example": "War and Peace",
  "message": "must not be blank",
  "default": "Untitled",
  "matchType": "exact",
  "validation": {
    "minLength": 4,
    "maxLength": 20,
    "regex": {
      "pattern": "/[A-Za-z0-9]*/"
    }
  }
}

| Property | Description | Default | Example | Required? |
|---|---|---|---|---|
| fieldName | The name of the field | | "title" | Yes |
| type | The type of the field. Possible values: "String", "Number", "DateTime", "Boolean", "Mixed", "Object", "Reference" | N/A | "String" | Yes |
| label | The label for the field | "" | "Title" | No |
| comments | The description of the field | "" | "The article title" | No |
| example | An example value for the field | "" | "War and Peace" | No |
| validation | Validation rules, including minimum and maximum length and regular expression patterns. | {} | | No |
| validation.minLength | The minimum length for the field. | unlimited | 4 | No |
| validation.maxLength | The maximum length for the field. | unlimited | 20 | No |
| validation.regex | A regular expression the field's value must match | | { "pattern": /[A-Z]*/ } | No |
| required | If true, a value must be entered for the field. | false | true | No |
| message | The message to return if field validation fails. | "is invalid" | "must contain uppercase letters only" | No |
| default | An optional value to use as a default if no value is supplied for this field | | "0" | No |
| matchType | Specifies the type of query that is performed when using this field. If "exact", API will attempt to match the query value exactly; otherwise it will perform a case-insensitive query. | | "exact" | No |
| format | Used by some fields (e.g. DateTime) to specify the expected format for input/output | null | "YYYY-MM-DD" | No |
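To show how the `validation` and `message` properties fit together, here is a rough sketch of a string validator. It is an illustration only and not API's validation code: `validateString` is a hypothetical function, and it assumes the regex `pattern` is supplied as a bare pattern string without surrounding slashes.

```javascript
// Checks a string value against the `validation` block of a field
// specification. Returns null when valid, or the failure message.
function validateString (value, field) {
  const rules = field.validation || {}
  const message = field.message || 'is invalid'

  if (typeof value !== 'string') return message
  if (rules.minLength !== undefined && value.length < rules.minLength) return message
  if (rules.maxLength !== undefined && value.length > rules.maxLength) return message

  if (rules.regex && rules.regex.pattern) {
    // Assumption: `pattern` is a bare pattern string without delimiters
    if (!new RegExp(rules.regex.pattern).test(value)) return message
  }

  return null
}

const titleField = {
  type: 'String',
  message: 'must be 4 to 20 letters, digits or spaces',
  validation: {
    minLength: 4,
    maxLength: 20,
    regex: { pattern: '^[A-Za-z0-9 ]*$' }
  }
}

console.log(validateString('War and Peace', titleField)) // null
console.log(validateString('War', titleField))
// must be 4 to 20 letters, digits or spaces
```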

Field Types

Every field in a collection must be one of the following types. All documents sent to API are validated against a collection's field type to ensure that data will be stored in the format intended. See the section on Validation for more details.

| Type | Description | Example |
|---|---|---|
| String | The most basic field type, used for text data. Will also accept arrays of Strings. | "The quick brown fox", ["The", "quick", "brown", "fox"] |
| Number | Accepts numeric data types including whole integers and floats | 5, 5.01 |
| DateTime | Stores dates/times. Accepts numeric values (Unix timestamp), strings in the ISO 8601 format or in any format supported by Moment.js as long as the pattern is defined in the format property of the field schema. Internally, values are always stored as Unix timestamps. | "2018-04-27T13:18:15.608Z", 1524835111068 |
| Boolean | Accepts only two possible values: true or false | true |
| Object | Accepts single JSON documents or an array of documents | { "firstName": "Steve" } |
| Mixed | Can accept any of the above types: String, Number, Boolean or Object | |
| Reference | Used for linking documents in the same collection or a different collection, solving the problem of storing subdocuments in documents. See Document Composition (reference fields) for further information. | the ID of another document as a String: "560a5baf320039f7d6a78d3b" |
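The DateTime behaviour can be pictured as a normalisation step. The sketch below is an assumption-laden illustration, not API's implementation: `toTimestamp` is a hypothetical function, timestamps are assumed to be in milliseconds (as the 13-digit example value suggests), and custom `format` patterns are not handled. `Date.parse` also accepts some non-ISO strings, so a stricter check may be wanted in practice.

```javascript
// Converts an incoming DateTime value to a Unix timestamp in milliseconds.
function toTimestamp (value) {
  // Numeric values are taken to be timestamps already
  if (typeof value === 'number' && Number.isFinite(value)) return value

  if (typeof value === 'string') {
    const parsed = Date.parse(value)

    if (!Number.isNaN(parsed)) return parsed
  }

  throw new TypeError('Expected a Unix timestamp or an ISO 8601 string')
}

const stored = toTimestamp('2018-04-27T13:18:15.608Z')

console.log(stored)
console.log(new Date(stored).toISOString()) // 2018-04-27T13:18:15.608Z
```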

Collection settings

Each collection specification can contain a settings block. API applies sensible defaults to collections, all of which can be overridden using properties in the settings block. Collection configuration is controlled in the following way:

{
  "settings": {
    "cache": true,
    "authenticate": true,
    "count": 100,
    "sort": "title",
    "sortOrder": 1,
    "callback": null,
    "storeRevisions": false,
    "index": []
  }
}

| Property | Description | Default | Example |
|---|---|---|---|
| displayName | A friendly name for the collection. Used by the auto-generated documentation plugin. | n/a | "Articles" |
| cache | If true, caching is enabled for this collection. The global config must also have cache: true for caching to be enabled | false | true |
| authenticate | Specifies whether requests for this collection require authentication, or whether only certain HTTP methods must be authenticated | true | false, ["POST"] |
| count | The number of results to return when querying the collection | 50 | 100 |
| sort | The field to sort results by | "_id" | "title" |
| sortOrder | The direction in which to sort results | 1 | 1 = ascending, -1 = descending |
| enableVersioning | Whether to store a new document revision for each update/delete operation | true | false |
| versioningCollection | The name of the collection used to hold revision documents | The collection name with the string "Versions" appended | "authorsVersions" |
| callback | Name of a function to use as a JSONP callback | null | setAuthors |
| defaultFilters | Specifies a default query for the collection. A filter parameter passed in the querystring will extend these filters. | {} | { "published": true } |
| fieldLimiters | Specifies a list of fields for inclusion/exclusion in the response. Fields can be included or excluded, but not both. See Retrieving data for more detail. | {} | { "title": 1, "author": 1 }, { "dob": 0, "state": 0 } |
| index | Specifies a set of indexes that should be created for the collection. See Creating Database Indexes for more detail. | [] | { "keys": { "username": 1 }, "options": { "unique": true } } |
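The `authenticate` setting accepts either a Boolean or a list of HTTP methods. One way to read that, sketched below, is a function that decides whether a given request method needs an access token. `requiresAuthentication` is a hypothetical helper written for this illustration and does not reflect API's internals; it applies the documented default of `true` when the setting is absent.

```javascript
// Decides whether a request with the given HTTP method must be
// authenticated, based on a collection's `settings` block.
function requiresAuthentication (settings, method) {
  const authenticate = settings.authenticate === undefined
    ? true // documented default
    : settings.authenticate

  if (Array.isArray(authenticate)) {
    // Only the listed methods require authentication
    return authenticate
      .map(item => item.toUpperCase())
      .includes(method.toUpperCase())
  }

  return Boolean(authenticate)
}

console.log(requiresAuthentication({}, 'GET')) // true
console.log(requiresAuthentication({ authenticate: false }, 'POST')) // false
console.log(requiresAuthentication({ authenticate: ['POST'] }, 'GET')) // false
console.log(requiresAuthentication({ authenticate: ['POST'] }, 'POST')) // true
```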

Overriding configuration using querystring parameters

It is possible to override some of these values when requesting data from the endpoint, by using querystring parameters. See Querying a collection for detailed documentation.

Collection configuration endpoints

Every collection in your API has an additional configuration route available. To use it, append /config to one of your collection endpoints, for example: https://api.somedomain.tech/1.0/library/books/config.

Making a GET request to the collection's configuration endpoint returns the collection schema:

GET /1.0/library/books/config HTTP/1.1

Content-Type: application/json

Authorization: Bearer 37f9786b-3f39-4c87-a8ff-9530efd176c3

Host: api.somedomain.tech

Connection: close

HTTP/1.1 200 OK

Content-Type: application/json

content-length: 12639

Date: Mon, 18 Sep 2017 14:05:44 GMT

Connection: close

{

"fields": {

"published": {

"type": "Object",

"label": "Published State",

"required": true

}

},

"settings": {

}

}

The REST API

The primary way of interacting with DADI API is via REST endpoints that are automatically generated for each of the collections added to the application. Each REST endpoint allows you to insert, update, delete and query data stored in the underlying database.

REST endpoint format

http(s)://api.somedomain.tech/{version}/{database}/{collection name}

The REST endpoints follow the above format, where {version} is the current version of the API collections (not the installed version of API), {database} is the database that holds the specified collection and {collection name} is the actual collection to interact with. See Collections directory for more detail.

Example endpoints for each of the supported HTTP verbs:

# Insert documents

POST /1.0/my-database/my-collection

# Update documents

PUT /1.0/my-database/my-collection

# Delete documents

DELETE /1.0/my-database/my-collection

# Get documents

GET /1.0/my-database/my-collection

Content-type header

In almost all cases, the Content-Type header should be application/json. API contains some internal endpoints which allow text/plain but for all interaction using the above endpoints you should use application/json.

Authorization header

Unless a collection has authentication disabled, every request using the above REST endpoints will require an Authorization header containing an access token. See Obtaining an Access Token for more detail.

Working with data

Retrieving data

Sending a request using the GET method instructs API to find and retrieve all documents that match certain criteria.

There are two types of retrieval operation: one where a single document is to be retrieved and its identifier is known; and the other where one or many documents matching a query should be retrieved.

Retrieve a single resource by ID

To retrieve a document with a known identifier, add the identifier to the REST endpoint for the collection.

Request

Format: GET http://api.somedomain.tech/1.0/library/books/{ID}

GET /1.0/library/books/560a44b33a4d7de29f168ce4 HTTP/1.1

Authorization: Bearer afd4368e-f312-4b14-bd93-30f35a4b4814

Content-Type: application/json

Host: api.somedomain.tech

Retrieves the document with the identifier of {ID} from the specified collection (in this example books).

Retrieve all documents matching a query

Useful for retrieving multiple documents that have a common property or share a pattern. Include the query in the querystring using the filter parameter.

Request

Format: GET http://api.somedomain.tech/1.0/library/books?filter={QUERY}

GET /1.0/library/books?filter={"title":{"$regex":"the"}} HTTP/1.1

Authorization: Bearer afd4368e-f312-4b14-bd93-30f35a4b4814

Content-Type: application/json

Host: api.somedomain.tech

Query options

When querying a collection, the following options can be supplied as URL parameters:

| Property | Description | Default | Example |
|---|---|---|---|
| compose | Whether to resolve referenced documents (see the possible values of the compose parameter) | The value of settings.compose in the collection schema | compose=true |
| count | The maximum number of documents to be retrieved in one page | The value of settings.count in the collection schema | count=30 |
| fields | The list of fields to include in or exclude from the response. Takes an object mapping field names to either 1 or 0, which will include or exclude the field, respectively. | The value of settings.compose in the collection schema | fields={"first_name":1,"l_name":1} |
| filter | A query to filter results by. See filtering documents for more detail. | The value of settings.compose in the collection schema | filter={"first_name":"John"} |
| version | The ID of a particular revision of a document to retrieve. Applicable only when retrieving a single document by ID and the collection being queried has document versioning enabled. | null | version=5c54612ed10f781ca6fff604 |
| page | The number of the page of results to retrieve | 1 | page=3 |
| sort | The fields to sort results by, as an object mapping field names to either 1 or 0, which will sort results by that field in ascending or descending order, respectively | The value of settings.sortOrder in the collection schema | sort={"first_name":1} |
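To illustrate how several of these options combine, the following sketch builds a query string from a plain object. The helper name buildQueryString is hypothetical; object values are serialised as JSON and percent-encoded, which is an assumption about how a client would typically send them rather than a documented requirement.

```javascript
// Hypothetical helper: turns an options object into a URL query string.
// Object values (such as sort or fields) are sent as encoded JSON.
function buildQueryString (options) {
  return Object.entries(options)
    .map(([name, value]) => {
      const raw = typeof value === 'object' ? JSON.stringify(value) : String(value)
      return `${name}=${encodeURIComponent(raw)}`
    })
    .join('&')
}

const queryString = buildQueryString({
  count: 30,
  page: 3,
  sort: { first_name: 1 }
})

console.log(`/1.0/library/books?${queryString}`)
// prints "/1.0/library/books?count=30&page=3&sort=%7B%22first_name%22%3A1%7D"
```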

Anchor link Filtering documents

DADI API uses a MongoDB-style format for querying objects, introducing a series of operators that allow powerful queries to be assembled.

| Syntax | Description | Example |
|---|---|---|
| {field:value} | Strict comparison. Matches documents where the value of field is exactly value | {"first_name":"John"} |
| {field:{"$regex": value}} | Matches documents where the value of field matches a regular expression defined as /value/i | {"first_name":{"$regex":"John"}} |
| {field:{"$in":[value1,value2]}} | Matches documents where the value of field is either value1 or value2 | {"last_name":{"$in":["Doe","Spencer","Appleseed"]}} |
| {field:{"$containsAny":[value1,value2]}} | Matches documents where the value of field (an array) contains at least one of value1 or value2 | {"tags":{"$containsAny":["dadi","dadi-api","restful"]}} |
| {field:{"$gt": value}} | Matches documents where the value of field is greater than value | {"height":{"$gt":175}} |
| {field:{"$lt": value}} | Matches documents where the value of field is less than value | {"weight":{"$lt":85}} |
| {field:"$now"}, {field:{"$lt":"$now"}}, etc. | (DateTime fields only) Matches documents comparing the value of field against the current date | {"publishDate":{"$lt":"$now"}} |
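To make the operator descriptions concrete, here is an illustrative in-memory matcher that applies a filter to a plain JavaScript object. It is not DADI API's implementation; it only mirrors the wording of the table, omits $now, and the names matches and matchesCondition are hypothetical.

```javascript
// Illustrative only: checks one field value against one condition.
function matchesCondition (actual, condition) {
  if (condition === null || typeof condition !== 'object') {
    return actual === condition // strict comparison
  }
  return Object.entries(condition).every(([operator, expected]) => {
    switch (operator) {
      case '$regex':
        return new RegExp(expected, 'i').test(actual) // /value/i
      case '$in':
        return expected.includes(actual)
      case '$containsAny':
        return Array.isArray(actual) && expected.some(item => actual.includes(item))
      case '$gt':
        return actual > expected
      case '$lt':
        return actual < expected
      default:
        throw new Error(`Operator not covered by this sketch: ${operator}`)
    }
  })
}

// A document matches when every field in the filter matches.
function matches (doc, filter) {
  return Object.entries(filter).every(
    ([field, condition]) => matchesCondition(doc[field], condition)
  )
}

const person = {
  first_name: 'John',
  last_name: 'Doe',
  height: 180,
  tags: ['dadi', 'node']
}

console.log(matches(person, { first_name: { $regex: 'jo' } }))
console.log(matches(person, { height: { $lt: 175 } }))
```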

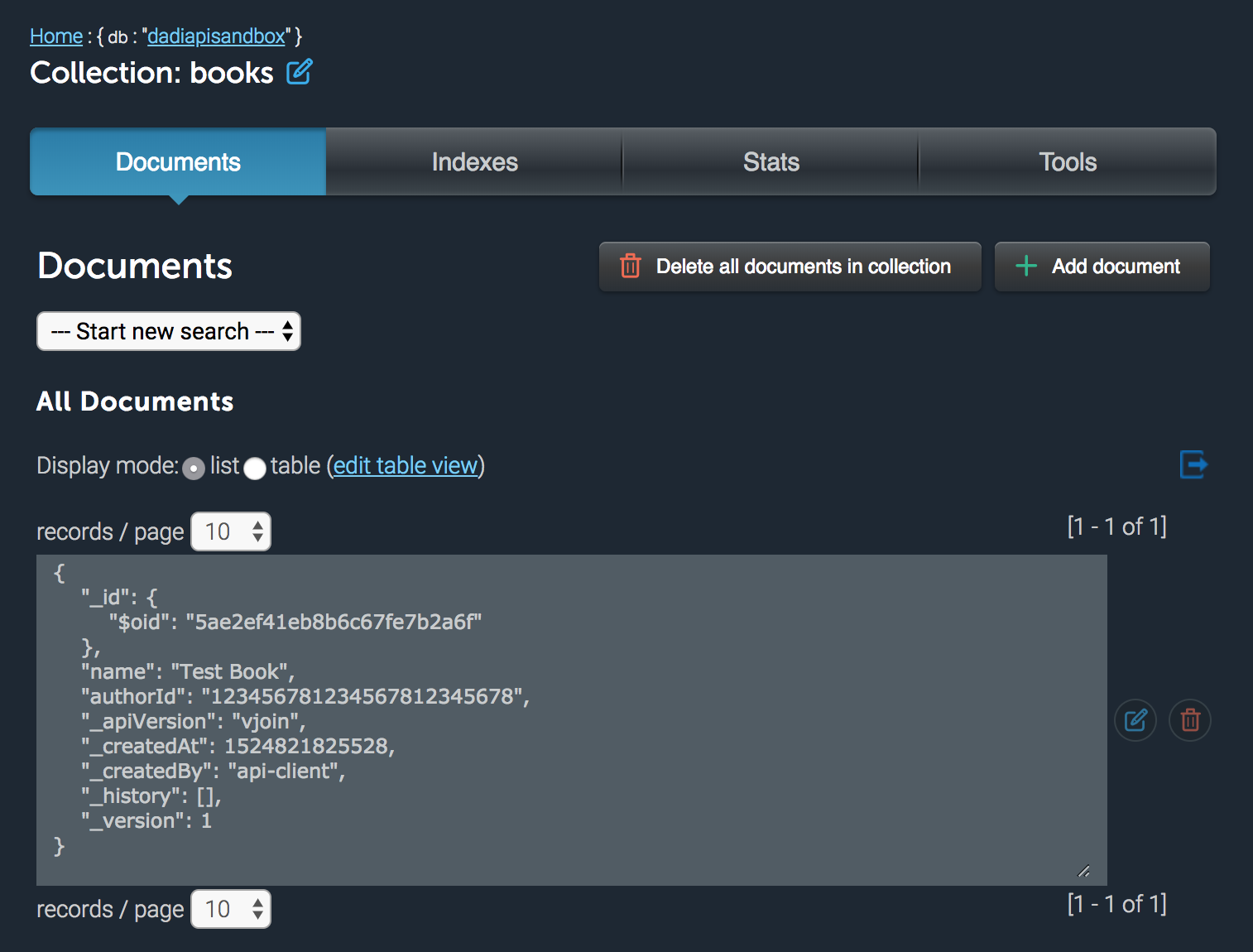

Anchor link Inserting data

Inserting data involves sending a POST request to the endpoint for the collection that will store the data. If the data passes validation rules imposed by the collection, it is inserted into the collection with a set of internal fields added.

Anchor link Request

Format: POST http://api.somedomain.tech/1.0/library/books

POST /1.0/library/books HTTP/1.1

Authorization: Bearer afd4368e-f312-4b14-bd93-30f35a4b4814

Content-Type: application/json

Host: api.somedomain.tech

{

"title": "The Old Man and the Sea"

}

Anchor link Response

{

"results": [

{

"_id": "5ae1b6464e0b766dd17dbab9",

"_apiVersion": "1.0",

"_createdAt": 1511875141,

"_createdBy": "your-client-id",

"_version": 1,

"title": "The Old Man and the Sea"

}

]

}
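A client will usually want the identifier of the newly created document. The sketch below assumes that results is an array of document objects, as in the update responses shown on this page; the helper name insertedIds is hypothetical.

```javascript
// Hypothetical helper: collects the _id of every document in a response.
// Assumes `results` is an array of document objects.
function insertedIds (responseBody) {
  const results = Array.isArray(responseBody.results) ? responseBody.results : []
  return results.map(doc => doc._id)
}

// Sample response body, abbreviated from the example above.
const responseBody = {
  results: [
    {
      _id: '5ae1b6464e0b766dd17dbab9',
      _version: 1,
      title: 'The Old Man and the Sea'
    }
  ]
}

const ids = insertedIds(responseBody)
console.log(ids)
```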

Anchor link Common validation errors

In addition to failures caused by validation rules in collection field specifications, you may also receive an HTTP 400 Bad Request error if required fields are missing or if the request contains fields that don't exist in the collection schema:

HTTP/1.1 400 Bad Request

Content-Type: application/json

content-length: 681

Date: Mon, 18 Sep 2017 18:21:04 GMT

Connection: close

{

"success": false,

"errors": [

{

"field": "description",

"message": "can't be blank"

},

{

"field": "extra_field",

"message": "doesn't exist in the collection schema"

}

]

}
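A client could turn an error body like the one above into readable messages. This is a sketch under the assumption that the body has the shape shown (a success flag and an errors array of field/message pairs); the helper name summariseErrors is hypothetical.

```javascript
// Hypothetical helper: flattens a validation error body into messages.
function summariseErrors (body) {
  if (body.success !== false || !Array.isArray(body.errors)) {
    return []
  }
  return body.errors.map(error => `${error.field}: ${error.message}`)
}

// The error body from the example response above.
const errorBody = {
  success: false,
  errors: [
    { field: 'description', message: "can't be blank" },
    { field: 'extra_field', message: "doesn't exist in the collection schema" }
  ]
}

const messages = summariseErrors(errorBody)
messages.forEach(message => console.log(message))
```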

Anchor link Batch inserting documents

It is possible to insert multiple documents in a single POST request by sending an array to the endpoint:

POST /1.0/library/books HTTP/1.1

Authorization: Bearer afd4368e-f312-4b14-bd93-30f35a4b4814

Content-Type: application/json

Host: api.somedomain.tech

[

{

"title": "The Old Man and the Sea"

},

{

"title": "For Whom the Bell Tolls"

}

]
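The batch request above differs from a single insert only in that the body is a JSON array. A sketch of preparing such a request follows; buildInsertRequest is a hypothetical helper that returns a plain description of the request rather than sending it.

```javascript
// Hypothetical helper: describes a POST request that inserts one document
// (when given an object) or several documents (when given an array).
function buildInsertRequest (collectionUrl, documents, accessToken) {
  return {
    url: collectionUrl,
    method: 'POST',
    headers: {
      Authorization: `Bearer ${accessToken}`,
      'Content-Type': 'application/json'
    },
    body: JSON.stringify(documents)
  }
}

const request = buildInsertRequest(
  'http://api.somedomain.tech/1.0/library/books',
  [
    { title: 'The Old Man and the Sea' },
    { title: 'For Whom the Bell Tolls' }
  ],
  'afd4368e-f312-4b14-bd93-30f35a4b4814'
)

console.log(request.body)
```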

Anchor link Updating data

Updating data with API involves sending a PUT request to the endpoint for the collection that holds the data.

There are two types of update operation: one where a single document is to be updated and its identifier is known; and the other where one or many documents matching a query should be updated.

In both cases, the request body must contain the required update specified as JSON.

If the data passes the validation rules imposed by the collection, the update is applied and the internal fields _lastModifiedAt, _lastModifiedBy and _version are updated.

Anchor link Update an existing resource

To update a document with a known identifier, add the identifier to the REST endpoint for the collection.

Anchor link Request

Format: PUT http://api.somedomain.tech/1.0/library/books/{ID}

PUT /1.0/library/books/560a44b33a4d7de29f168ce4 HTTP/1.1

Authorization: Bearer afd4368e-f312-4b14-bd93-30f35a4b4814

Content-Type: application/json

Host: api.somedomain.tech

{

"update": {

"title": "For Whom the Bell Tolls (Kindle Edition)"

}

}

Updates the document with the identifier of {ID} in the specified collection (in this example books). Applies the values from the update block specified in the request body.

Anchor link Response

{

"results": [

{

"_apiVersion": "v1",

"_createdAt": 1524741702962,

"_createdBy": "testClient",

"_id": "5ae1b6464e0b766dd17dbab9",

"_lastModifiedAt": 1524741826339,

"_lastModifiedBy": "testClient",

"_version": 2,

"title": "For Whom the Bell Tolls (Kindle Edition)"

}

],

"metadata": {

"fields": {},

"page": 1,

"offset": 0,

"totalCount": 1,

"totalPages": 1

}

}

Anchor link Update all documents matching a query

This is useful for batch updating documents that share a property. Include the query in the request body, along with the required update.

Anchor link Request

Format: PUT http://api.somedomain.tech/1.0/library/books

PUT /1.0/library/books HTTP/1.1

Authorization: Bearer afd4368e-f312-4b14-bd93-30f35a4b4814

Content-Type: application/json

Host: api.somedomain.tech

{

"query": {

"title": {

"$regex": "the"

}

},

"update": {

"available": false

}

}

Updates all documents that match the query in the specified collection (in this example books). Applies the values from the update block specified in the request body.
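The two kinds of update differ only in the URL and in whether the body carries a query. The sketch below captures both in one hypothetical helper, buildUpdateRequest, which returns a description of the PUT request rather than sending it.

```javascript
// Hypothetical helper: describes a PUT request for either kind of update.
// Pass `id` to update one known document, or `query` to update all matches.
function buildUpdateRequest (collectionUrl, update, options = {}) {
  const { id, query } = options
  const url = id ? `${collectionUrl}/${encodeURIComponent(id)}` : collectionUrl
  const body = query ? { query, update } : { update }
  return { url, method: 'PUT', body: JSON.stringify(body) }
}

const collectionUrl = 'http://api.somedomain.tech/1.0/library/books'

// Update a single document by its identifier.
const byId = buildUpdateRequest(
  collectionUrl,
  { title: 'For Whom the Bell Tolls (Kindle Edition)' },
  { id: '560a44b33a4d7de29f168ce4' }
)

// Update every document matching a query.
const byQuery = buildUpdateRequest(
  collectionUrl,
  { available: false },
  { query: { title: { $regex: 'the' } } }
)

console.log(byId.url)
console.log(byQuery.body)
```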

Anchor link Response

{

"results": [

{

"_apiVersion": "v1",

"_createdAt": 1524741702962,

"_createdBy": "testClient",

"_id": "5ae1b6464e0b766dd17dbab9",

"_lastModifiedAt": 1524741826339,

"_lastModifiedBy": "testClient",

"_version": 2,

"title": "For Whom the Bell Tolls (Kindle Edition)",

"available": false

},

{

"_apiVersion": "v1",

"_createdAt": 1524741702962,

"_createdBy": "testClient",

"_id": "5ae1b6464e0b766dd17dbab8",

"_lastModifiedAt": 1524741826339,

"_lastModifiedBy": "testClient",

"_version": 1,